To create a truly immersive experience, VR creators must understand how an object moves in space. So how do we track and simulate complex movements in a 3D environment? How do we take what we see in the real world and apply it to VR to “trick” our brains? VR image resolution has a long way to go to match our eyesight, but we do a pretty good job with motion. This motion is typically discussed in terms of Degrees of Freedom (DOF). It turns out motion can be broken down into two types, rotational and translational, with each representing 3 DOF.

Rotational Movement

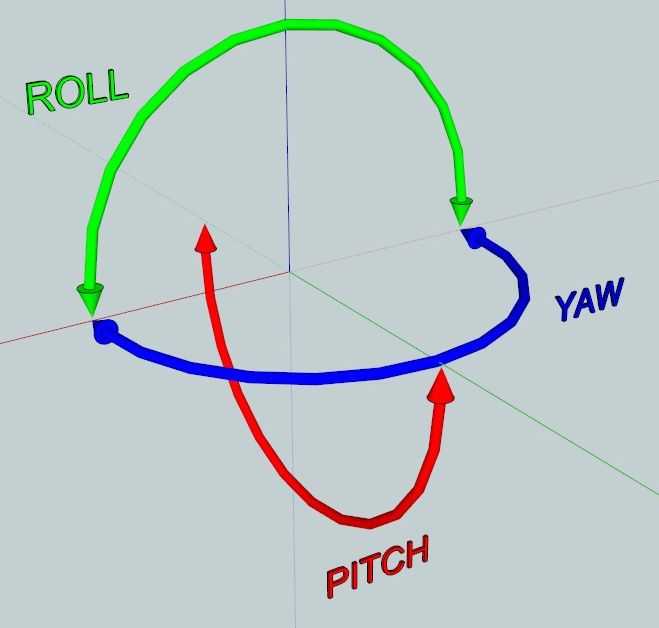

Objects in a 3D space can rotate with three DOF: pitch, yaw, and roll. Think of a helicopter hovering above you. It can move its nose up and down (pitch), turn to face left or right (yaw), and tip side to side (roll).
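To make the three rotational DOF concrete, here is a minimal sketch that turns yaw, pitch, and roll into a rotation matrix and applies it to a direction vector. The axis convention (x forward, y left, z up, with rotations applied in roll, pitch, yaw order) and the function names are assumptions made for illustration; real engines and headsets each pick their own conventions.

```python
import math

def rotation_matrix(yaw, pitch, roll):
    """Build a 3x3 rotation matrix from angles in radians.

    Assumed convention (not from the article): yaw about z, pitch about y,
    roll about x, combined as R = Rz(yaw) * Ry(pitch) * Rx(roll).
    """
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp,     cp * sr,                cp * cr],
    ]

def rotate(matrix, vector):
    """Multiply a 3x3 matrix by a 3-element vector."""
    return [sum(matrix[i][j] * vector[j] for j in range(3)) for i in range(3)]

# The helicopter's nose starts out pointing along +x (forward).
nose = [1.0, 0.0, 0.0]

# Yaw by 90 degrees: the nose swings around to point along +y.
turned = rotate(rotation_matrix(math.radians(90), 0.0, 0.0), nose)
```

Under this convention, `turned` comes out as approximately `[0, 1, 0]`: the helicopter has turned to face left without moving from its spot.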

Translational Movement

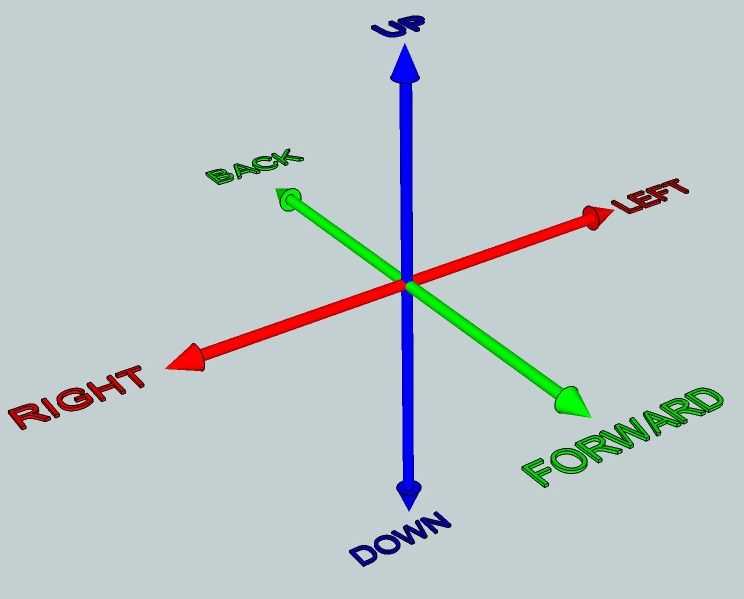

An object can translate (move from one place to another) in three ways: forward/back, up/down, and left/right. Any movement can be expressed as a combination of the rotational and translational DOF. Most high-end VR systems, like the HTC Vive and Oculus Rift, allow you to move with all six DOF.
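One way to picture six DOF is as a single "pose" holding three position values and three rotation angles. The sketch below is illustrative only: the class name `Pose6DOF`, the method `to_world`, and the axis convention (x forward, y left, z up) are assumptions, not the API of any particular headset SDK.

```python
import math
from dataclasses import dataclass

@dataclass
class Pose6DOF:
    # Translational DOF (meters): forward/back, left/right, up/down.
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0
    # Rotational DOF (radians).
    yaw: float = 0.0
    pitch: float = 0.0
    roll: float = 0.0

    def to_world(self, point):
        """Map a point from the object's local frame into the world frame.

        Rotates first (assumed order: roll, then pitch, then yaw) and then
        translates by the object's position.
        """
        cy, sy = math.cos(self.yaw), math.sin(self.yaw)
        cp, sp = math.cos(self.pitch), math.sin(self.pitch)
        cr, sr = math.cos(self.roll), math.sin(self.roll)
        px, py, pz = point
        rx = cy * cp * px + (cy * sp * sr - sy * cr) * py + (cy * sp * cr + sy * sr) * pz
        ry = sy * cp * px + (sy * sp * sr + cy * cr) * py + (sy * sp * cr - cy * sr) * pz
        rz = -sp * px + cp * sr * py + cp * cr * pz
        return (rx + self.x, ry + self.y, rz + self.z)

# A headset 1 m forward, 2 m left, 3 m up, turned 90 degrees to the left.
headset = Pose6DOF(x=1.0, y=2.0, z=3.0, yaw=math.pi / 2)

# A point 1 m in front of the headset, expressed in world coordinates.
in_front = headset.to_world((1.0, 0.0, 0.0))
```

A system that tracks only rotation would leave `x`, `y`, and `z` fixed, so leaning or walking would not change what you see. Tracking all six values lets the virtual camera follow both where you look and where you stand.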

How is this tracking accomplished?

VR headsets use IMUs (Inertial Measurement Units) to track orientation and movement. An IMU is a tiny sensor package containing an accelerometer, a gyroscope, and a magnetometer, which measure linear acceleration (including the pull of gravity), angular velocity, and magnetic heading, respectively. IMUs are responsible for measuring the 3 rotational DOF: pitch, yaw, and roll.
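As a rough illustration of how IMU readings can become angles, the sketch below uses a complementary filter, a common and simple fusion technique; it is not necessarily what any given headset ships. The function names, the `alpha` weighting, and the axis convention (a sensor lying flat and at rest reads roughly `(0, 0, +9.81)` m/s²) are assumptions.

```python
import math

def accel_to_pitch_roll(ax, ay, az):
    """Estimate pitch and roll (radians) from the direction of gravity.

    Only valid while the sensor is not accelerating much, because then the
    accelerometer reading is dominated by gravity.
    """
    pitch = math.atan2(-ax, math.sqrt(ay * ay + az * az))
    roll = math.atan2(ay, az)
    return pitch, roll

def complementary_filter(angle, gyro_rate, accel_angle, dt, alpha=0.98):
    """Blend a gyroscope and an accelerometer estimate of one angle.

    The gyroscope term (angle + gyro_rate * dt) is smooth but drifts over
    time; the accelerometer angle is noisy but does not drift. alpha sets
    how much the gyroscope is trusted on each step.
    """
    return alpha * (angle + gyro_rate * dt) + (1.0 - alpha) * accel_angle

# Hypothetical samples: (gyro pitch rate in rad/s, accelerometer x, y, z in m/s^2).
samples = [(0.0, -4.905, 0.0, 8.496)] * 500  # headset held tilted and still

pitch = 0.0
for gyro_rate, ax, ay, az in samples:
    accel_pitch, _ = accel_to_pitch_roll(ax, ay, az)
    pitch = complementary_filter(pitch, gyro_rate, accel_pitch, dt=0.01)
```

With the headset held still, `pitch` settles toward the tilt implied by gravity, here about 30 degrees. Gravity says nothing about yaw, which is why the magnetometer (or an external reference) is needed to keep the heading from drifting.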

The translational DOF are most often measured using cameras and motion sensors placed around the user, with on-device tracking on its way.